Research Projects

Music Technology students don't just make music. They study it through the lens of the scientific method and research new ways to create it. Every semester, they work in one of the labs in the Center for Music Technology to invent technologies that redefine how we express ourselves through music.

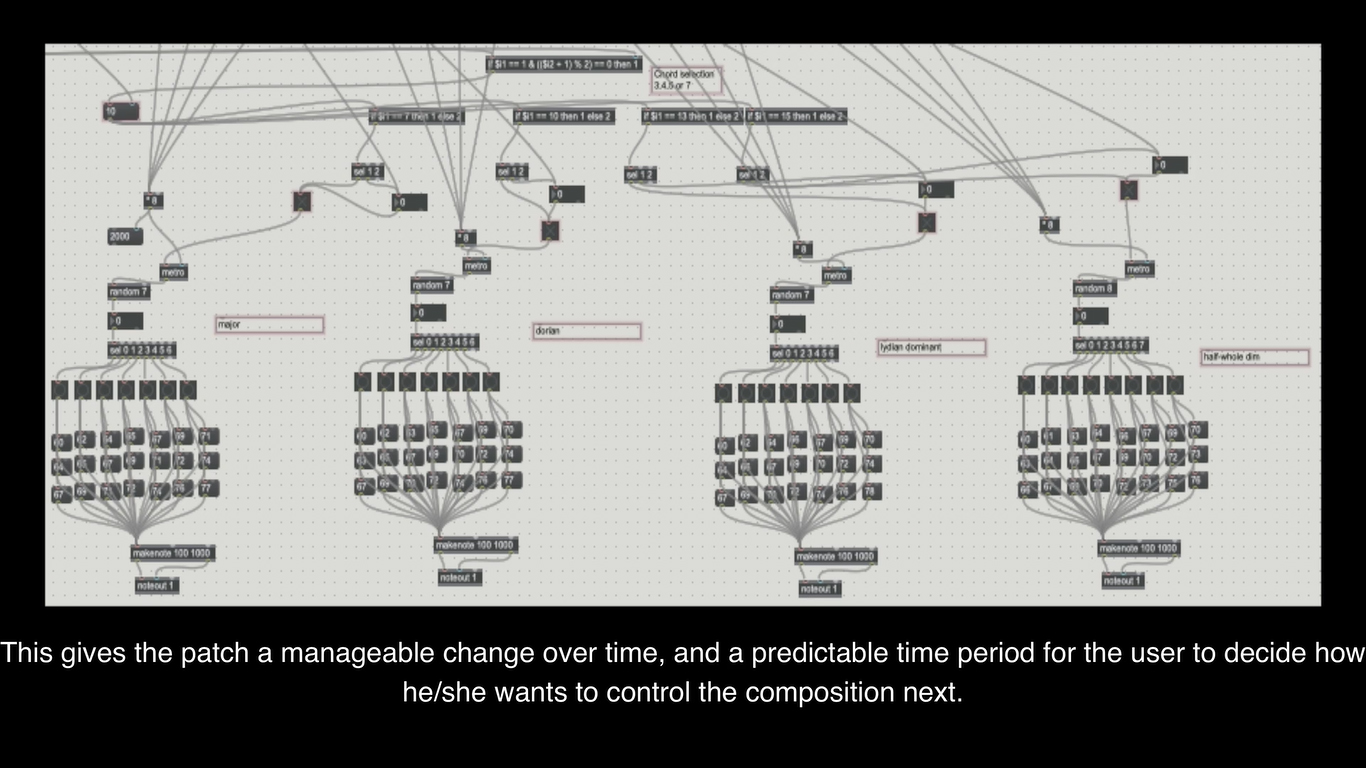

Flash Music

Project by: Matthew Arnold, Seth Holland, and Dallas McCorkendale

Flash Music is a music interface based on steady-state visually evoked potentials (SSVEP) that lets a user control a music composition by focusing their visual attention on different corners of a screen.

Sky Above

Project by: Chad Bullard, Carson Myers, and Ally Stout

In this online collaborative performance with Chad Bullard and Carson Myers, Ally Stout uses electromyography to control the balance between voices.

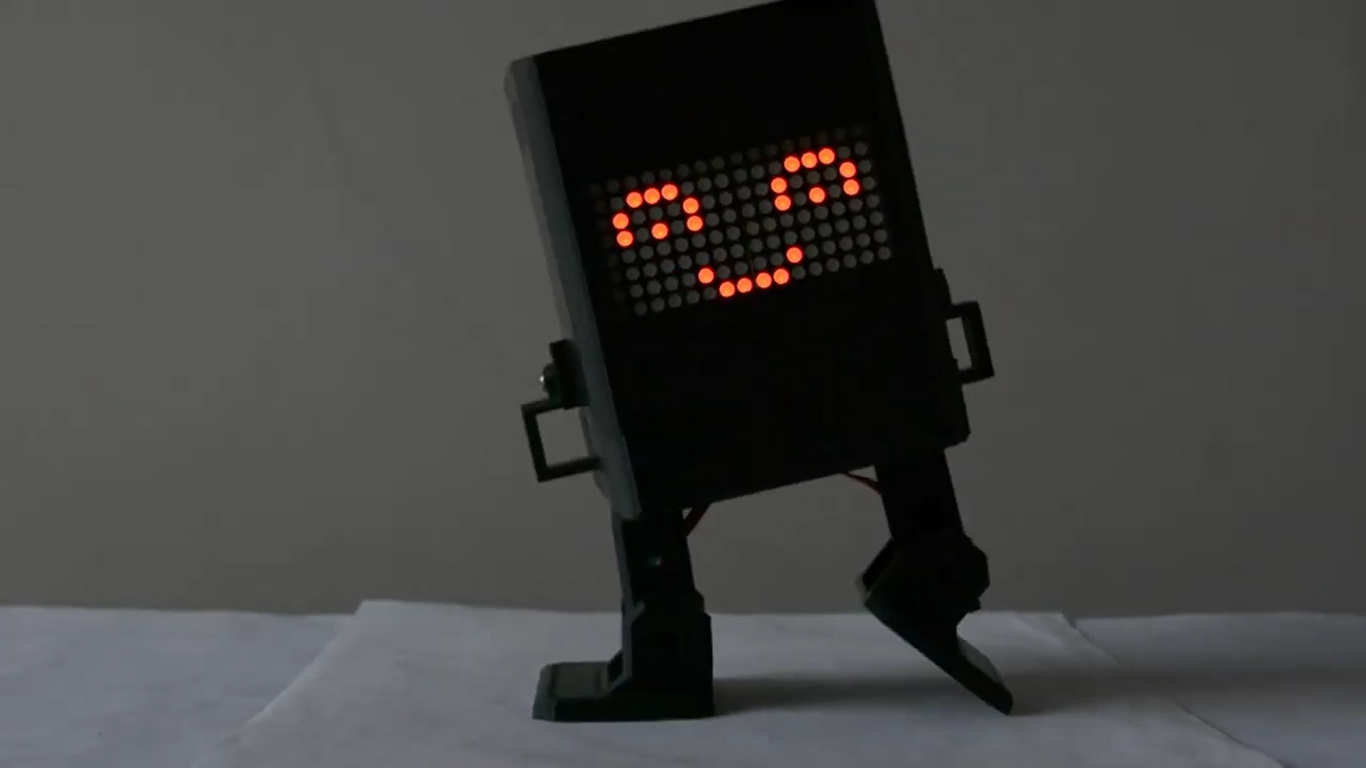

Design and Build of a Robot for Emotional Interaction Study

Project by: Rishikesh Daoo and Yilin Zhang

Rishikesh Daoo and Yilin Zhang collaborated to create a robot with emotional gestures, facial expressions, and music timbre synthesis that aims to generate emotional responses to human interactions.

Elevate

Project by: Lauren McCall

"Elevate" is a map-based interactive soundscape. It uses latitude, longitude, and elevation data to dynamically shape and spatialize music. As part of a repertory of geographically based sonification projects, Elevate explores both the connections among and the uniqueness of topographical data from various locations.

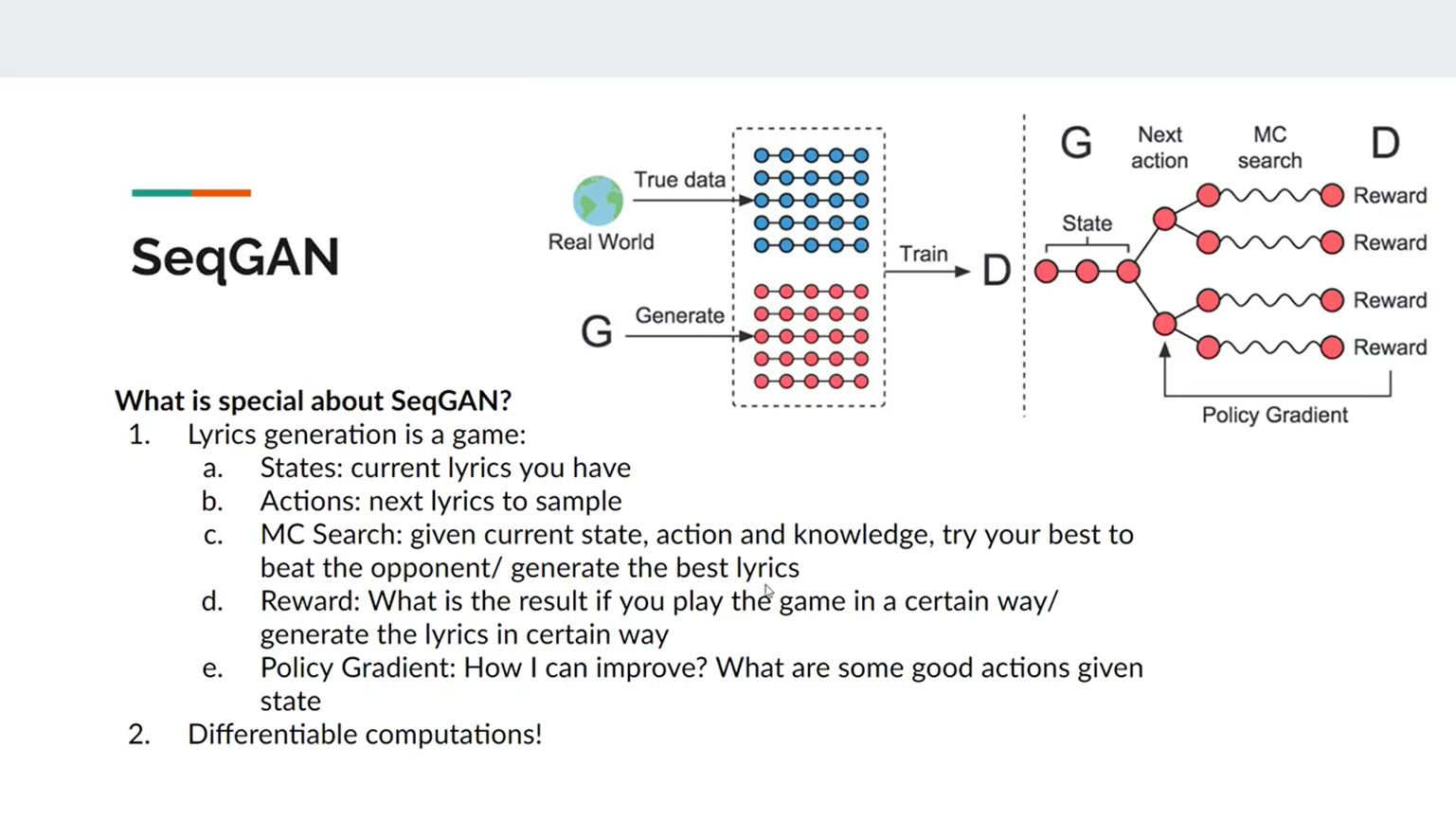

Melody Conditioned Lyrics Generation with SeqGAN

Project by: Yihao Chen

In this project, Yihao Chen builds a system that helps musicians and singer-songwriters write lyrics. He proposes an end-to-end, melody-conditioned lyrics generation system based on Sequence Generative Adversarial Networks (SeqGAN), which generates a line of lyrics given the corresponding melody as input.

Robotic Musician plays Carnatic Music on the Violin

Project by: Raghavasimhan Sankaranarayanan

This project presents a novel robotic violin player designed for performing Carnatic music. Using sophisticated mechatronics and music information retrieval techniques, the robot can play almost all of the musical articulations and ornamentations, including gamakas (glissandos), with intonation.

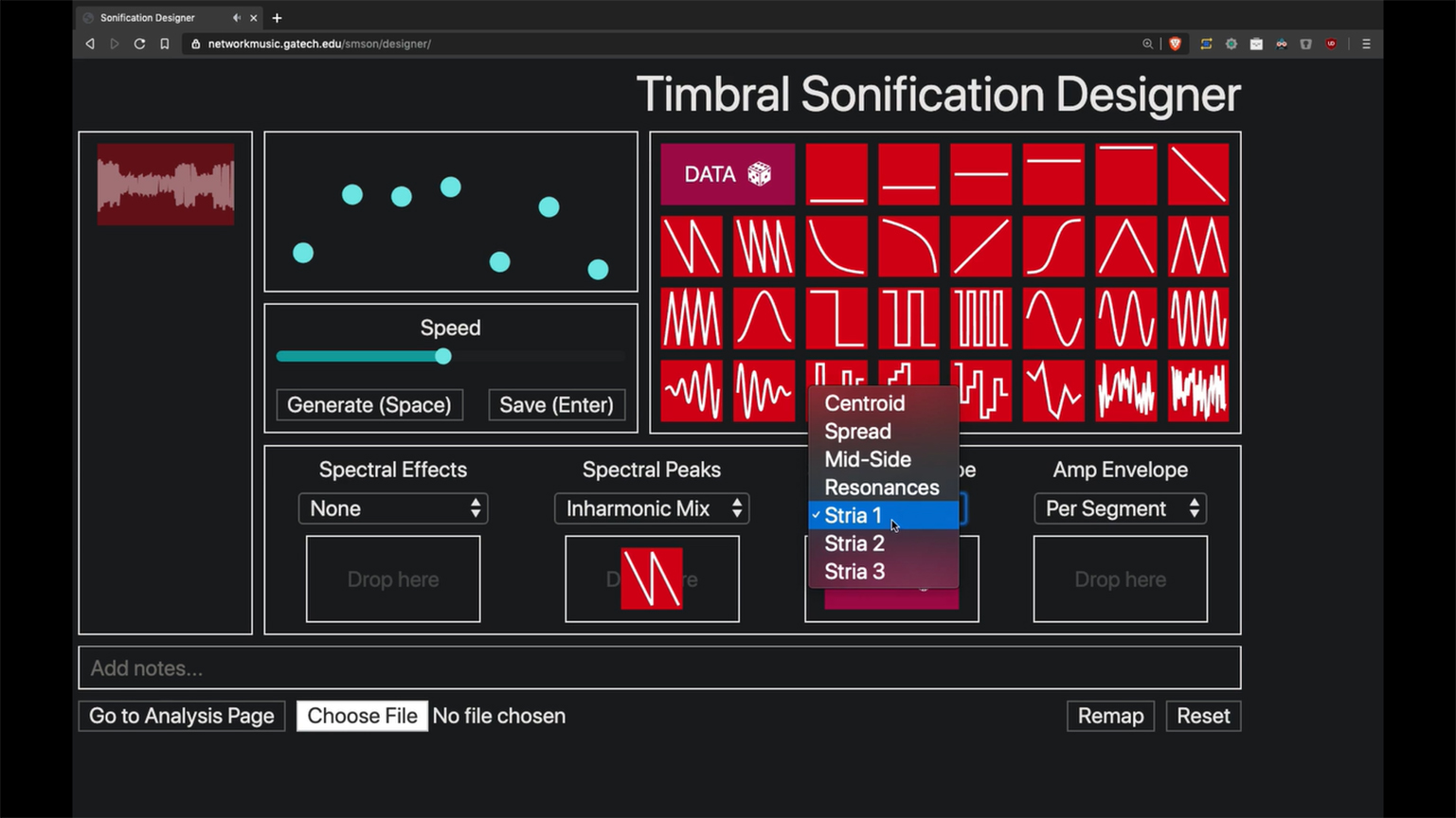

Timbral Sonification Designer

Project by: Takahiko Tsuchiya

The Timbral Sonification Designer is a web application for prototyping sonifications, the representation of data through various types of sound. It facilitates the creation of musical, changeable, and quantifiable sound expressions with data and arbitrary shapes mapped to multiple timbral dimensions.
