Latent Space Regularization for Music Source Separation

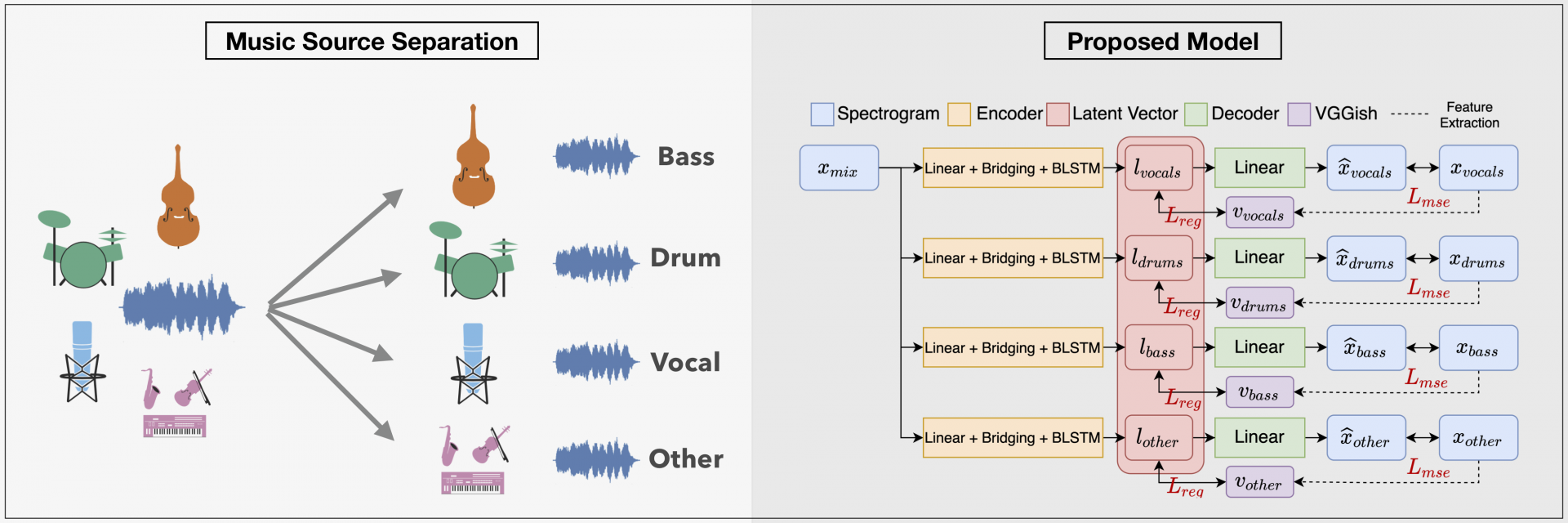

The integration of side information to improve music source separation has been investigated numerous times, e.g., by adding features to the input or by adding learning targets in a multi-task learning scenario. These approaches, however, require additional annotations such as musical scores or instrument labels during training and possibly during inference, and the available source separation datasets usually do not provide such annotations. In this work, we explore self-supervised learning strategies that use VGGish features for latent space regularization; these features are known to be a highly condensed representation of audio content and have been used successfully in many MIR tasks. We introduce three approaches to incorporate the features into a state-of-the-art source separation model and investigate their impact on the separation results.
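The abstract does not spell out the three approaches, so the following is only a minimal sketch of what a latent space regularizer *can* look like, not the authors' method. It assumes the regularizer is a mean-squared-error penalty between a learned linear projection of the separator's latent frames and time-aligned 128-dimensional VGGish embeddings of the target source, added to an L1 separation loss. The function names, the `projection` matrix, the frame alignment, and the `weight` value are all hypothetical.

```python
import numpy as np


def l1_loss(estimate, target):
    """Mean absolute error between estimated and reference source spectrograms."""
    return float(np.mean(np.abs(estimate - target)))


def latent_regularization_loss(latent, vggish_embedding, projection):
    """MSE between a linear projection of the latent code and VGGish embeddings.

    Assumption: `latent` has shape (frames, latent_dim) and is already aligned
    (e.g., pooled) to the VGGish frame rate, so `vggish_embedding` has shape
    (frames, 128). `projection` is a hypothetical learned (latent_dim, 128) matrix.
    """
    projected = latent @ projection  # (frames, latent_dim) @ (latent_dim, 128)
    return float(np.mean((projected - vggish_embedding) ** 2))


def total_loss(estimate, target, latent, vggish_embedding, projection, weight=0.1):
    """Separation loss plus a weighted latent-space regularization term.

    Assumption: the L1 separation loss and weight=0.1 are illustrative choices.
    """
    separation = l1_loss(estimate, target)
    regularization = latent_regularization_loss(latent, vggish_embedding, projection)
    return separation + weight * regularization
```

During training, the regularization term pulls the latent space toward the structure of the VGGish embedding space, while the separation term keeps the decoder output close to the reference source; `weight` trades off the two.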

Compared to the baseline system, our proposed methods reduce the artifacts and interference produced by the model, improving the SAR (signal-to-artifacts ratio) and SIR (signal-to-interference ratio) scores. Latent space visualization and classification results show that the regularized latent space has higher discriminative power. The proposed methods can easily be incorporated into other model architectures and adapted to other features.
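SAR and SIR are commonly computed with the BSS Eval framework, which splits an estimate into a target component, an interference component (leakage from the other sources), and an artifacts component (everything else). The sketch below is a simplified version that uses plain orthogonal projections with time-invariant gains only; established toolkits such as `mir_eval` and `museval` additionally allow distortion filters, so their scores will differ. The function name `bss_eval_sir_sar` is hypothetical.

```python
import numpy as np


def bss_eval_sir_sar(estimate, references, j, eps=1e-12):
    """Simplified BSS Eval SIR and SAR (in dB) for the estimate of source j.

    estimate:   (n_samples,) estimated signal for source j
    references: (n_sources, n_samples) ground-truth sources
    eps:        small constant that avoids division by zero
    """
    s = references[j]
    # Target component: orthogonal projection of the estimate onto source j.
    s_target = (estimate @ s) / (s @ s) * s
    # Orthogonal projection of the estimate onto the span of all sources.
    coeffs, *_ = np.linalg.lstsq(references.T, estimate, rcond=None)
    p_all = references.T @ coeffs
    # Interference: the part explained by the other sources.
    e_interf = p_all - s_target
    # Artifacts: the part not explained by any reference source.
    e_artif = estimate - p_all
    sir = 10 * np.log10(np.sum(s_target ** 2) / (np.sum(e_interf ** 2) + eps))
    sar = 10 * np.log10(np.sum((s_target + e_interf) ** 2) / (np.sum(e_artif ** 2) + eps))
    return sir, sar
```

For example, if the estimate is the target plus a copy of another, equally loud and orthogonal source scaled by 0.1, the interference energy is 1% of the target energy and the sketch reports an SIR of about 20 dB, while SAR stays very high because no artifacts are present.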

Yun-Ning (Amy) Hung

Yun-Ning (Amy) Hung is currently a master's student in Music Technology working with Prof. Alexander Lerch at the Georgia Institute of Technology. Her research interests lie at the intersection of machine learning, music signal processing, and music information retrieval, especially the development of deep learning models for music classification, instrument recognition, and music source separation.
